This blog uncovers the mystery of how to create A/R prepayments that are associated with their O/E documents and can be accessed via the drill-down button on the A/R receipt entry screen. The logic described here applies equally to O/E orders, shipments and invoices.

Creating a prepayment with an O/E document is difficult for a few reasons:

- Macro recording does not work; you need to use RVSPY to understand view calls.

- Creating a prepayment requires manipulation of three separate, un-composed views; because the views are not composed, every field on each view must be set individually.

There are three rough steps to creating a prepayment:

- Create an A/R receipt batch

- Create an O/E prepayment record.

- Update the O/E transaction header view.

Full source code is available for download at the bottom of this post.

A/R Receipt Batch

Firstly, it is necessary to either create a new A/R receipt batch or use a currently open batch. Creating the batch is relatively straightforward and really only requires you to set the bank account. Note that you do not need to compose each of the receipt views since you are not creating any receipt entries directly.

```vb
'Open the A/R Receipt Batch View
Dim ARReceiptBatch As AccpacView
mDBLinkCmpRW.OpenView "AR0041", ARReceiptBatch

'Create a new batch
ARReceiptBatch.Fields("CODEPYMTYP").PutWithoutVerification "CA"
ARReceiptBatch.Fields("CNTBTCH").PutWithoutVerification 0
ARReceiptBatch.Init

'Set the bank to which the receipt will be entered.
ARReceiptBatch.Fields("IDBANK").PutWithoutVerification sBank

'Set the bank currency...if you weren't setting this and were using the default
'bank account currency you can obtain it from the view.
If sBankCur <> vbNullString Then
    ARReceiptBatch.Fields("CODECURN").Value = sBankCur
Else
    sBankCur = ARReceiptBatch.Fields("CODECURN").Value
End If

'Set a batch description
ARReceiptBatch.Fields("BATCHDESC").Value = "Prepayment Batch from O/E"

'Update the batch
ARReceiptBatch.Update
```

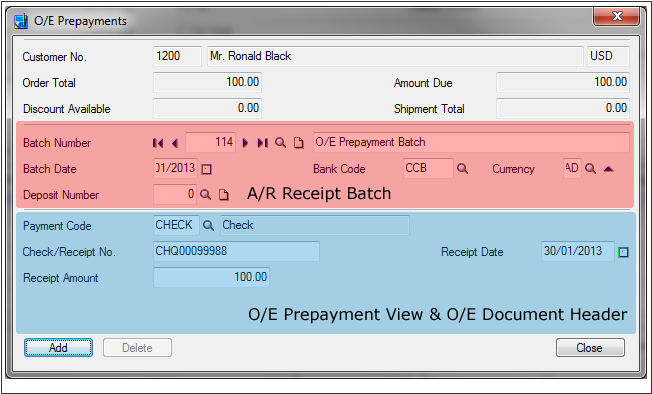

Update the O/E Prepayment View

The second step is to create the O/E prepayment record. The prepayment record is responsible for creating the A/R receipt entry, and it also associates the receipt with the O/E order so that there is a drill-down against the prepayment.

Each O/E transaction (Order, Shipment & Invoice) has a separate prepayment view where each is identical with the exception of the view id. Therefore the logic used here is applicable to each.

If you are familiar with Sage300 development, most of the views are nicely programmed where a lot of hard work is performed behind the scenes by the view. Unfortunately, the prepayment views are the complete opposite! They do not have any default values either from the customer or order, so it is necessary to explicitly set each field.

The code sample below illustrates this, where it’s necessary to set the Customer, Customer Name & Customer Currency all individually despite setting the Customer Id.

```vb
Dim OrdPrepayments As AccpacView
mDBLinkCmpRW.OpenView "OE0530", OrdPrepayments
OrdPrepayments.Init

'Associate the prepayment with order
OrdPrepayments.Fields("ORDUNIQ") = OEOrdHeaderFields("ORDUNIQ").Value
OrdPrepayments.Fields("CUSTOMER").Value = OEOrdHeaderFields("CUSTOMER").Value
OrdPrepayments.Fields("CUSTDESC").Value = OEOrdHeaderFields("BILNAME").Value
OrdPrepayments.Fields("CUSTCURN").Value = OEOrdHeaderFields("ORSOURCURR").Value
```

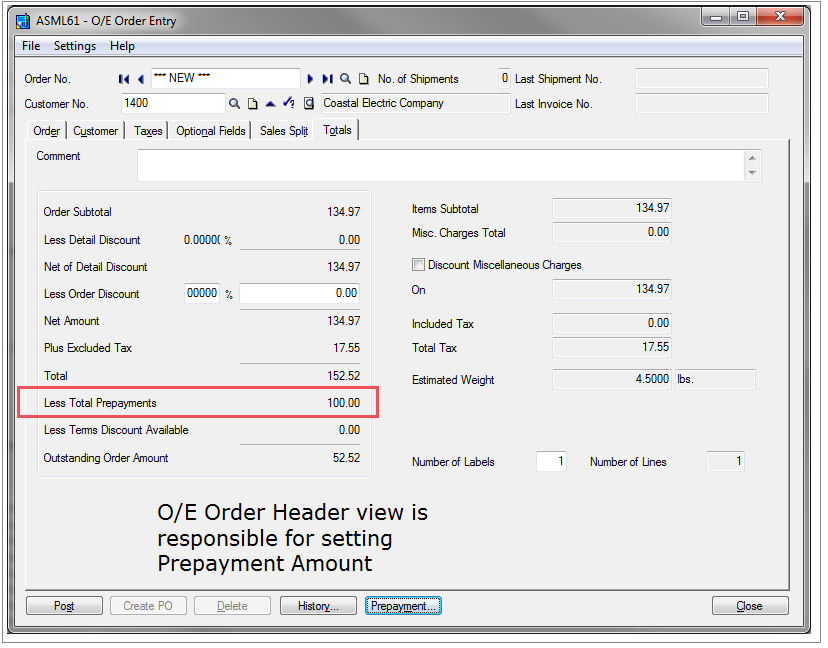

Update O/E Header View

The final step is to update the prepayment fields on the O/E transaction header view, which update the Total Prepayments field on the order’s Totals Tab.

The attached code is a full working sample illustrating how to create and attach a prepayment and should work for Sage300 versions 5.6 and above.

Download Sage 300 OE Prepayment source code

Don’t think that all of your data is going to import every time; if you’re lucky, some of it might some of the time.

When designing or writing your integration application it is essential that you expect errors and, as a result, design your program with the possibility for errors at the forefront of what you’re trying to achieve. In fact errors are probably just as critical as the data itself, if not more so.

This blog describes our best practices for designing error handling and logging within an integration.

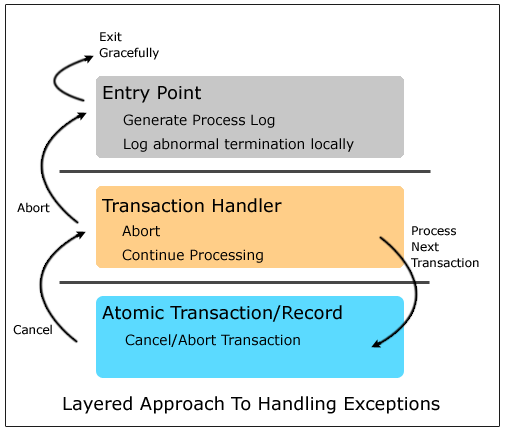

Design Pattern – The Layered Approach to Error Handling

In a previous position I had the unenviable task of having to maintain several of a colleague’s poorly written import programs. Each time I made a modification I would re-factor a certain part of the program just for my own sanity and in conjunction I maintained several integrations I’d written myself. Over time each import converged to a similar structure, all based upon the way the integration handled errors.

The resulting pattern was a 3, sometimes 4, layer structure where each layer had its own error handling responsibilities.

- Atomic transaction: This is the inner most layer of your integration and is responsible for managing a single ‘atomic’ transaction. On failure it is this layer which cancels or rolls-back any half written transaction.

- Transaction handler: The transaction handler is essentially a loop which calls into the ‘atomic’ transaction handler. When the atomic handler raises an error, this layer needs to decide how to proceed: whether to move to the next transaction or abort.

- Task handler (optional): When your integration interfaces with anything more than a single end-point the task handler manages the handling of exceptional and non-exceptional data. Where a transaction succeeds, it needs to be submitted to the next end-point, whereas a failure usually means that it should not be processed at the next.

- Entry point: The outermost layer to your application. It is responsible for:

  - Generation of the end user log or processing report.

  - Catching and logging any unexpected or catastrophic errors.
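The layers above can be sketched in a few lines. This is only an illustrative Python outline, not code from any of our integrations: the names `import_one`, `import_all`, `run`, `RunResult` and `TransactionError` are hypothetical, the "destination" is a plain list, and the optional task handler layer is left out.

```python
from dataclasses import dataclass, field
from typing import Optional


class TransactionError(Exception):
    """An expected, per-transaction failure (for example, an invalid quantity)."""


@dataclass
class RunResult:
    imported: list = field(default_factory=list)
    failed: list = field(default_factory=list)       # (record id, message) pairs
    catastrophic: Optional[str] = None


def import_one(record: dict, target: list) -> None:
    """Atomic layer: write one transaction completely or not at all."""
    staged = []                                       # half-written work lives here
    for line in record["lines"]:
        if line["qty"] <= 0:
            raise TransactionError(
                f"order {record['id']}: invalid quantity {line['qty']}"
            )
        staged.append((record["id"], line["item"], line["qty"]))
    target.extend(staged)                             # commit only once every line is valid


def import_all(records: list, target: list, result: RunResult) -> None:
    """Transaction handler: loop and decide how to proceed after a failure."""
    for record in records:
        try:
            import_one(record, target)
            result.imported.append(record["id"])
        except TransactionError as exc:
            result.failed.append((record["id"], str(exc)))   # skip, move to the next


def run(records: list, target: list) -> RunResult:
    """Entry point: catch anything unexpected and always return a result."""
    result = RunResult()
    try:
        import_all(records, target, result)
    except Exception as exc:                          # unplanned: network, disk, memory...
        result.catastrophic = repr(exc)
    return result
```

Here the transaction handler chooses to skip a failed order and carry on; aborting the whole run instead would be a one-line change in `import_all`, which is the point of keeping that decision in its own layer.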

Atomic transactions

It goes without saying that whenever an error occurs in your automated integration it shouldn’t leave half written, partial transactions, database records, or files.

Sometimes however the concept of a transaction needs some thought, and requires input from business requirements, the technology of the platform and the ability to re-process data.

For example, should a batch of cash transactions into an accounting system be imported in an all-or-nothing approach, or should the integration import the ones it can, omitting the erroneous transactions? This scenario has implications on where the batch is posted; without the erroneous transactions, it may affect the bank reconciliation function at a later point.
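To make the two choices concrete, here is a minimal Python sketch using an in-process SQLite database as a stand-in for the accounting system. The `receipts` table (assumed to have `ref` and `amount` columns), the `import_batch` function and the "amount must be positive" rule are all assumptions made for illustration.

```python
import sqlite3


def import_batch(conn, receipts, all_or_nothing):
    """Insert cash receipts and return a list of (reference, error) rejections.

    With all_or_nothing=True the first bad receipt rolls back the whole batch.
    With all_or_nothing=False bad receipts are skipped and reported.
    """
    rejected = []
    try:
        for ref, amount in receipts:
            try:
                if amount <= 0:
                    raise ValueError(f"receipt {ref}: amount must be positive")
                conn.execute(
                    "INSERT INTO receipts (ref, amount) VALUES (?, ?)", (ref, amount)
                )
            except (ValueError, sqlite3.Error) as exc:
                if all_or_nothing:
                    raise                        # abandon the whole batch
                rejected.append((ref, str(exc)))
        conn.commit()
    except Exception:
        conn.rollback()                          # nothing from this batch survives
        raise
    return rejected
```

Whichever mode the business chooses, the rejections (or the rolled-back batch) still need to reach the end-user report so the bank reconciliation can be explained later.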

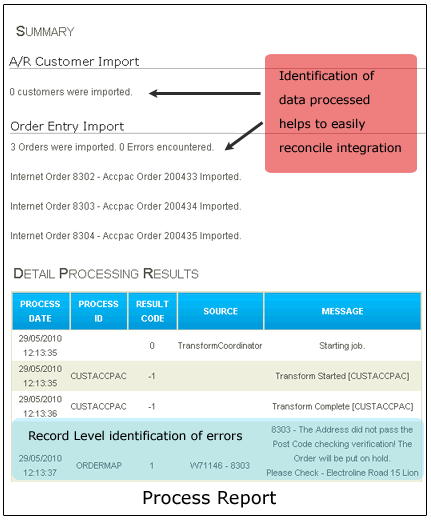

Logging types

In our experience there are two audiences for the logs of any integration: end-users and technical staff. Users will want something they can understand, and technical people want something they can use to debug or understand a problem.

End-user reports

- Are sent to the people responsible for monitoring any integration/data feed.

- Should be in readable, easily digested format.

- Should allow the user to be able to quickly reconcile data passed between applications.

- Should provide the user enough information to allow them to identify and/or resolve the issue, or at least identify any erroneous data.

Catch all & Catastrophic error logging

- Used to catch unexpected errors and exceptions, useful for technical staff to help identify isolated issues.

Catastrophic errors are simply those you didn’t plan for; for example, a loss of network connection; a corrupted file; a sudden disconnection from a database; an untimely permissions change; or an out-of-memory exception.

In contrast, an invalid item code on a sales order import is not catastrophic; it should be handled by your standard logging mechanism and reported on your end-user reports.

- Catastrophic errors should be logged locally, to a local file, a local database or the Windows event log. Don’t rely on remote resources such as remote databases or email, as network availability could be limited or could itself be the cause.

Runtime logging

Errors, warnings & messages should be persisted as early as possible, which means writing to a database or file as and when they occur. Should a catastrophic error occur you have a process log, a trace, which would otherwise be lost if they were held in memory and persisted at the end of the process.

Log libraries

It is worth mentioning that there are a number of logging and tracing libraries available, such as NLog, log4net, the .NET trace libraries and Microsoft’s Enterprise Library, which can provide you with a robust and feature-rich way of persisting errors and warnings to a number of mediums. If you decide to use these, as opposed to rolling your own log scheme, be aware that the libraries can require some understanding to configure and set up, and that their outputs are not suited to end users.

Generating the end user process report

At the conclusion of processing it is necessary to generate the process report for the end users. If you’ve followed our recommendations, this should mean re-querying the medium to which the errors were persisted during processing and re-formatting them into an easy to read format.

The best and easiest format to send the end users a process report is email for the following reasons:

- Everyone has email.

- Portable and amendable: Annotations can be made and forwarded to others if remedial action needs to be made by another person.

- Logs can be easily formatted with html and presented nicely.
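As a rough illustration of the two steps (persist as you go, then re-query to build the report), here is a small Python sketch. The `run_log` table, its columns and the function names are hypothetical, and SQLite simply stands in for whatever local database or file you log to.

```python
import html
import sqlite3
from datetime import datetime, timezone


def open_log(path):
    """Open (or create) the local log store."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS run_log ("
        " id INTEGER PRIMARY KEY, logged_at TEXT, level TEXT,"
        " reference TEXT, message TEXT)"
    )
    conn.commit()
    return conn


def log(conn, level, reference, message):
    """Persist the entry immediately so it survives a later crash."""
    conn.execute(
        "INSERT INTO run_log (logged_at, level, reference, message)"
        " VALUES (?, ?, ?, ?)",
        (datetime.now(timezone.utc).isoformat(), level, reference, message),
    )
    conn.commit()


def build_report(conn):
    """Re-query the persisted entries and format them as an HTML table."""
    rows = conn.execute(
        "SELECT level, reference, message FROM run_log ORDER BY id"
    ).fetchall()
    errors = sum(1 for level, _, _ in rows if level == "ERROR")
    body = "".join(
        f"<tr><td>{html.escape(level)}</td><td>{html.escape(ref)}</td>"
        f"<td>{html.escape(msg)}</td></tr>"
        for level, ref, msg in rows
    )
    return (
        f"<p>{len(rows)} entries, {errors} errors</p>"
        f"<table><tr><th>Level</th><th>Reference</th><th>Message</th></tr>"
        f"{body}</table>"
    )
```

The resulting string can be used as the HTML body of the report email. Escaping each value matters because error messages often contain characters such as `<` and `&` taken straight from the source data.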

Summary

Error handling and logging is a key component of any application, more so with integration! Without proper error logging and reporting, your organisation will at best spend needless time resolving and reconciling data between applications and at worst lose customers because of poor service and lost transactions.

We hope this has been of use to some of you and if you ask us nicely, we may just provide you with some samples and template code illustrating the best practices we’ve described.

In this blog we take a deep dive into the mechanics of using the Sage reporting engine in an external application using the Sage200 SDK for both single and multi-threaded applications. Topics covered:

- Correctly referencing the Sage reporting engine

- Using the Sage reporting engine within a service

- Use the Sage reporting engine in a multi-threaded application

- The Sage200 Security Context

- Multi-threaded use of the Sage200 Application object

Full source code is available at the bottom of the blog.

Background

When we added functionality to IMan to print various Sage200 forms, we encountered a number of issues during development, deployment and use in a production environment.

This blog will attempt to uncover and present the solution to these issues. We hope that this will help other Sage developers who are grappling with the reporting engine complexities.

External printing with Sage Report Engine

The first phase to adding print support to our application was to provide support for printing forms such as invoices, picking lists, etc.

The biggest hurdle we faced was the correct referencing of various Sage libraries and dependencies.

References

- Copy Program FilesSage200 Unity.config to your local bin directory.

- Reference the following assemblies and ensure that NONE are copied to your local bin directory.

  - Sage.Reporting.Services

  - Sage.Reporting.Model

  - Sage.Reporting.Engine.Integration

  - Sage.Query.Engine.Model

  - Sage.Query.Engine

- These assemblies are located in various places on a workstation with Sage200 installed; some are in the SDK, some are in the GAC, some are in the Sage installation directory. For those assemblies stored in the GAC we extract them using GAC View.

Setting the Printer at Runtime

At the time of writing (an enhancement request has been logged with Sage) the Sage Reporting Engine does not have any facility to set the printer at runtime. It can however be set by traversing the internal object structure of the ReportEngine object.

```csharp
/// <summary>
/// Prints a report to a specific printer or exports a report to a file.
/// </summary>
/// <param name="exportFilePath">The file to export the report to, can be empty or
/// null if the report is being printed.</param>
/// <param name="exportService">The IExportService i.e. this object.</param>
/// <param name="flags">Should always be set to SuppressUserInteraction.</param>
/// <param name="exportType">The type of file if the report is being exported or
/// prn if being printed.</param>
/// <param name="printerName">The local printer name if the report is being
/// printed.</param>
/// <returns>True...not sure of the significance as it's undocumented.</returns>
private bool PrintReport(string exportFilePath, IExportService exportService,
    ExportFlags flags, ExportType exportType, string printerName)
{
    Type exporterType = exportService.GetType();
    PropertyInfo reportsPropertyInfo = exporterType.GetProperty("Reports",
        System.Reflection.BindingFlags.Public | System.Reflection.BindingFlags.Instance);
    IList reports = reportsPropertyInfo.GetValue(exportService, null) as IList;

    // Should never be zero, but just in case.
    if (reports.Count == 0)
    {
        throw new Exception("No reports in the layout file");
    }

    // Get the ReportBatchItem
    var batchItem = reports[0];

    // Get the report.
    var reportPropertyInfo = batchItem.GetType().GetProperty("Report");

    // Get the actual report object.
    Sage.Reporting.Model.IReport report =
        reportPropertyInfo.GetValue(batchItem, null) as Sage.Reporting.Model.IReport;

    // Set the printer.
    // Sometimes the PrinterSettings can be null (usually when the report is
    // saved with a printer which is not installed/available under the user
    // profile which the report is being run).
    if (report.PaperLayout.PrinterSettings == null)
    {
        System.Drawing.Printing.PrinterSettings printSettings =
            new System.Drawing.Printing.PrinterSettings();
        // Set the printer name before setting the object to the PrinterSettings
        // property.
        // Setting the object to the property fails, with the property remaining
        // null.
        printSettings.PrinterName = printerName;
        report.PaperLayout.PrinterSettings = printSettings;
    }
    else
    {
        System.Drawing.Printing.PrinterSettings printerSettings =
            report.PaperLayout.PrinterSettings;
        printerSettings.PrinterName = printerName;
    }
}
```

Using the Sage Reporting Engine within a service

Using any library within a Windows service deserves special attention due to the various restrictions of the running environment; the Sage reporting engine is no different.

- ExportFlags.SuppressUserInteraction: Everything in a service needs to run unattended, therefore it is necessary to instruct the reporting engine not to display prompts or message boxes. Further, service security in Vista and Server 2008 was tightened so that any service attempting to display any UI element is terminated by Windows.

- Sage.Accounting.DataModel.dll: Copy Sage.Accounting.DataModel.dll, located in C:\Users\<userid>\AppData\Local\Sage\Sage200\AssemblyCache, to your service’s bin directory. As the name suggests, the Sage.Accounting.DataModel.dll assembly contains the Sage200 data model that is dynamically built by the Sage server whenever a model extension is installed (for instance when you add a new view or table to the database and run through the Custom Model Builder).

- Use locally installed printers: Services don’t load user profiles (irrespective of the service’s user); therefore they don’t have access to network printers (which are loaded dynamically as part of the user profile).

Using the Sage Reporting Engine in a multi-threaded application

This section deals with the threading issues experienced when our application attempted to run multiple instances of the Sage reporting engine concurrently.

Having completed the initial report printing development we deployed this to a couple of sites which were running without issue.

Our problem came on a new site where each time our application attempted to print Sage200 forms concurrently we would encounter a range of exceptions. These exceptions would essentially terminate the execution of one of the concurrently running integrations.

Background

Our IMan application is multi-threaded, meaning that each integration runs within a separate thread of a single multi-threaded apartment (MTA) executable.

To this point we had not experienced any threading related problems with IMan. Multiple integrations could be run concurrently without issue. Further, we could successfully run multiple integrations or threads concurrently where each could create transactions within Sage200.

Problem

Under the hood, the first thing Sage does when creating a ReportingEngine object is make a call to DoEvents (this implies the Sage reporting engine is written in VB.Net).

Because this ultimately makes a call to the Windows messaging queue, any interactions with the UI or messaging queue should come from a single thread (marked as a Single Threaded Apartment (STA)) from within an application.

Windows does not permit multiple threads within an application to access the messaging queue; an exception is raised by Windows when multiple threads attempt to do so.

Why is an STA Thread Required? (Simplified): http://blogs.msdn.com/b/jfoscoding/archive/2005/04/07/406341.aspx

A fuller discussion of COM and threading models: http://msdn.microsoft.com/en-us/library/ms693344(VS.85).aspx

The error below is the one generated when multiple threads of the same application attempt to create ReportingEngine objects at the same time.

The error is pretty misleading, and at the time we thought that we were dealing with a linking or referencing issue with some Office components.

Given that we hadn’t had a threading issue previously and that we had suppressed user interaction (above), we were left scratching our heads for a while.

The clue came when we looked at the callstack more closely: DoEvents and FPushMessageLoop both indicate some UI interaction is occurring.

```
System.InvalidCastException: Unable to cast COM object of type ‘System.__ComObject’ to interface type ‘IMsoComponentManager’. This operation failed because the QueryInterface call on the COM component for the interface with IID ‘{000C0601-0000-0000-C000-000000000046}’ failed due to the following error: No such interface supported (Exception from HRESULT: 0x80004002 (E_NOINTERFACE)).
   at System.Windows.Forms.UnsafeNativeMethods.IMsoComponentManager.FPushMessageLoop(Int32 dwComponentID, Int32 uReason, Int32 pvLoopData)
   at System.Windows.Forms.ComponentManagerProxy.System.Windows.Forms.UnsafeNativeMethods.IMsoComponentManager.FPushMessageLoop(Int32 dwComponentID, Int32 reason, Int32 pvLoopData)
   at System.Windows.Forms.Application.ThreadContext.RunMessageLoopInner(Int32 reason, ApplicationContext context)
   at System.Windows.Forms.Application.ThreadContext.RunMessageLoop(Int32 reason, ApplicationContext context)
   at System.Windows.Forms.Application.DoEvents()
   at Sage.Reporting.Engine.Integration.ReportingEngine..ctor()
   at Realisable.Connectors.Sage200.ReportExporter..ctor(String reportFileName, Dictionary`2 criteria, String exportFilePath, Boolean print, String printerName)
```

Our requirements

- Retain the multi-threadedness of IMan: Changing our architecture to single threaded was a non-starter.

- In-process: We did consider writing a separate print spool application, but this suffered from two problems:

  - Marshalling the print requests and responses between two applications was considered too fragile and difficult.

  - Sage200 permits only a single login per user at a time. A separate process would have required a second login and Sage user.

Solution

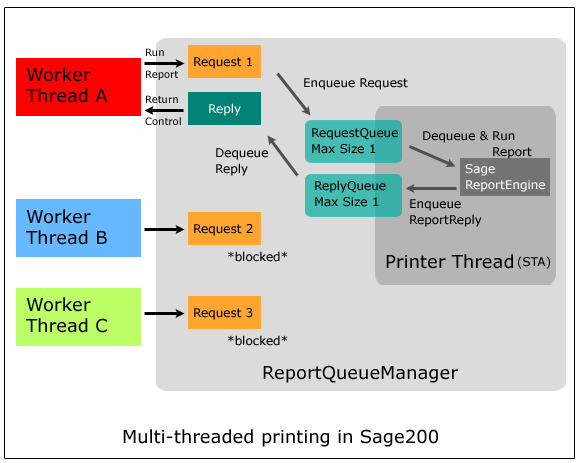

The solution is a variant of the synchronised singleton pattern (the usual description is in Java, but the pattern applies), where a single thread (the print thread) processes report requests one at a time. Each subsequent print request is blocked until the print thread completes the currently executing print request.

Implementation

ReportQueueManager

The ReportQueueManager marshals the print requests to the Sage reporting engine and is also responsible for printer thread running state. It contains 4 methods:

- RunReport: Called by the worker threads to process a print request.

- ProcessReport: The internal method which de-queues and processes each request.

- Start & Shutdown: Respectively manage the startup and shutdown of the printer thread.

Printer thread

Internal to the ReportQueueManager class is a single threaded apartment (STA) thread which is responsible for processing each print request.

Inter-thread communication/synchronisation

Two blocking queues are used to communicate between the calling/worker thread and the printer thread:

- Request Queue: Accepts print requests from the worker thread.

- Reply Queue: Returns the success/failure of a print request from the printer thread back to the calling/worker thread.

The request queue is the key component for synchronising calls between the worker and printer threads. The queue is set to permit only a single request at a time i.e. its maximum length is set to one. When the queue is full (it has a request), all further requests are blocked; i.e. their execution is halted, thus permitting only a single request to be processed at a time.

To achieve the blocking queue we used a pattern as described here.
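The mechanism can be outlined in Python, whose standard `queue.Queue` is already a blocking queue. This is a simplified sketch rather than our C# implementation: the class and method names mirror the description, `print_func` is a hypothetical stand-in for the reporting engine, and, as a deliberate simplification, each request carries its own one-slot reply queue so a reply can never be collected by the wrong worker.

```python
import queue
import threading


class ReportRequest:
    def __init__(self, report_name, printer_name):
        self.report_name = report_name
        self.printer_name = printer_name
        self.reply = queue.Queue(maxsize=1)       # assumption: one reply slot per request


class ReportQueueManager:
    def __init__(self, print_func):
        self._print_func = print_func             # stand-in for the reporting engine
        self._requests = queue.Queue(maxsize=1)   # at most one pending request
        self._thread = threading.Thread(target=self._process, daemon=True)

    def start(self):
        self._thread.start()

    def shutdown(self):
        self._requests.put(None)                  # sentinel stops the printer thread
        self._thread.join()

    def run_report(self, request):
        """Called by worker threads; blocks until the printer thread replies."""
        self._requests.put(request)               # blocks while the queue is full
        outcome = request.reply.get()             # blocks until the job finishes
        if isinstance(outcome, Exception):
            raise outcome                         # re-raise the failure in the caller
        return outcome

    def _process(self):
        """Runs on the single printer thread and handles one request at a time."""
        while True:
            request = self._requests.get()        # blocks until work arrives
            if request is None:
                break
            try:
                request.reply.put(self._print_func(request))
            except Exception as exc:
                request.reply.put(exc)
```

Every call to `print_func` happens on the one printer thread, so the workers stay multi-threaded while the engine only ever sees a single thread. Python has no notion of COM apartments; in .NET the printer thread would additionally need to be marked as STA.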

ReportRequest

The ReportRequest object encapsulates all the criteria such as report name, report criteria/parameters, printer name, etc. for a print job.

A ReportRequest object is created by a worker thread for each print request. The worker thread calls ReportQueueManager.RunReport; if the queue is empty, the request is processed immediately. However, if the queue is not empty (meaning a report is being printed), the calling thread is blocked.

Communicating success/failure to the caller

To communicate back to the calling thread if the print operation has succeeded or failed, a ReportReply object is created and placed onto the reply queue.

Execution is returned when the ReportQueueManager de-queues the reply object from the reply queue. The reply is checked for success or failure, and where there is failure, it re-throws the exception.

Understanding the Sage200 logon context

Our solution meant that each print request could be run against different Sage companies/databases, so a critical component in our solution was to get ReportEngine to target the correct Sage company.

If you have ever dealt with the Sage reporting engine, you would have noticed that there are no properties or methods for authentication or for targeting a specific company/database. This is because the reporting engine uses the current thread’s security context/principal (System.Threading.Thread.CurrentPrincipal).

Per MSDN, a principal object represents the security context of the user on whose behalf the code is running, including that user’s identity (IIdentity) and any roles to which they belong.

Sage200 sets the CurrentPrincipal as part of the set operation of the Application.ActiveCompany property.

```csharp
// Authenticate to Sage200
_application.Connect("admin", "admin");

// Set the active company.
for (int i = 0; i < _application.Companies.Count; i++)
{
    if (_application.Companies[i].Name == "Sage200_2010_DemoData")
    {
        // When the ActiveCompany property is set Sage sets the
        // current thread's CurrentPrincipal property with logon
        // context.
        _application.ActiveCompany = _application.Companies[i];
        break;
    }
}
```

The solution requires that the print thread’s CurrentPrincipal is set to the principal of the calling worker thread.

Since the worker and print threads are separate, it is necessary to pass the worker thread’s principal to the print thread for each print request.

```csharp
public class ReportRequest
{
    public ReportRequest()
    {
        // Capture the Principal of the calling thread
        Principal = Thread.CurrentPrincipal;

        // Used for debug and tracing purposes.
        ThreadId = Thread.CurrentThread.ManagedThreadId;
    }
    // ....
```

We do this by capturing the principal in the constructor of the ReportRequest class. When the ReportQueueManager de-queues the request it sets the printer thread’s CurrentPrincipal to the Principal of the request object.

```csharp
/// <summary>
/// Worker method to process ReportRequests
/// </summary>
private static void ProcessReport()
{
    // Run forever!
    while (true)
    {
        // This method will block until a request is placed onto the queue.
        ReportRequest request = _requestQueue.Dequeue();

        // Set this thread's principal to the principal of the request,
        // which the reporting engine then uses.
        Thread.CurrentPrincipal = request.Principal;
```

The result allows each calling/worker thread to target a separate Sage200 company e.g. worker thread ‘A’ targets company ‘Y’ and worker thread ‘B’, company Z.
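The hand-off can be pictured with a small Python analogy. Python has no equivalent of Thread.CurrentPrincipal, so this sketch uses a `threading.local` object as a stand-in for per-thread logon context; `set_principal`, `get_principal` and `run_on_print_thread` are hypothetical names used only for illustration.

```python
import threading

_context = threading.local()              # stand-in for Thread.CurrentPrincipal


def set_principal(value):
    """Attach a logon context to the current thread only."""
    _context.principal = value


def get_principal():
    """Return the current thread's logon context, or None if none is set."""
    return getattr(_context, "principal", None)


class ReportRequest:
    def __init__(self, report_name):
        self.report_name = report_name
        self.principal = get_principal()          # captured on the calling thread
        self.thread_id = threading.get_ident()    # for debug and tracing only


def run_on_print_thread(request, seen):
    """Install the request's principal on this thread before doing the work."""
    set_principal(request.principal)
    seen.append((request.report_name, get_principal()))
```

Because the context is captured in the constructor, it is the worker thread's value that travels with the request, and the print thread simply adopts whatever the request carries before calling the engine.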

It is worth noting that without this the Sage ReportingEngine object will raise an exception when CurrentPrincipal is not set (ref 1).

Sage.Accounting.Application objects are not threadsafe

During testing we kept encountering a DuplicateKeyFound exception when setting the ActiveCompany property whenever two threads entered the method simultaneously. Our solution was to wrap the code for the connect and disconnect in a lock statement.

```csharp
// The ActiveCompany property setter is not threadsafe,
// wrap the code around a lock to prevent 'DuplicateKeyFound' exception.
// _syncLock is a static object declared at class level.
lock (_syncLock)
{
    // Authenticate to Sage200
    _application.Connect("admin", "admin");

    // Set the active company.
    for (int i = 0; i < _application.Companies.Count; i++)
    {
        if (_application.Companies[i].Name == "Sage200_2010_DemoData")
        {
            // When the ActiveCompany property is set Sage sets the
            // current thread's CurrentPrincipal property with logon
            // context.
            _application.ActiveCompany = _application.Companies[i];
            break;
        }
    }
}
```

Summary

We would like to thank the Sage200 development support for all their assistance, and ESPI and the client for their patience.

Download Sage200 Report Manager source code

(Ref. 1) This is not entirely true! We found during testing that if we had Sage200 open and did not set the printer thread’s current principal the ReportEngine would somehow use the SAAPrincipal of the open application. An exception was thrown when Sage200 was not open.

File transfer

The old school method for data exchange has existed for as long as computers have interchanged data. File transfer involves one application creating a file and putting it in an agreed location, whilst the receiving application reads, processes and then does something with the file.

Pros

- Secure: File transfer can be easily locked down, making it a relatively secure means to exchange data. FTP and SSH are both solid protocols meaning remote exchange over the internet is secure.

Cons

- Difficult to audit: Compared with other mechanisms, files typically don’t have any natural means of auditing or tracing.

- Remote servers require an FTP/SSH solution to exchange files, adding another link in the chain of events.

- Fragile: Easy to get out of sync where a failure can require significant manual intervention to re-establish normal operation.

- CSV and other column based formats such as fixed width text and Excel suffer from brittleness, where file schema modifications (field additions and deletions) require significant re-configuration on the receiving end.

Best practices

- One transaction per file: It’s much easier to identify a particular transaction, and re-processing is easy should something go awry.

- For CSV and other column based formats, always add new fields to the end of the file and never remove existing ones. Better still, don’t use these formats!

- Use a resilient API based data format such as Xml or JSON. Do not go overboard however.

- Try to avoid Xml schema where possible. We feel schemas add unnecessary constraints to the format, where a substantial amount of the inherent flexibility of the format is lost. They add a level of complexity not required in the SME space.

- Always use a recognised API/library when consuming and producing Xml/JSON data. This will at minimum ensure the format is standard compliant. We’ve seen several instances where organisations (that should know better) provide us with improperly escaped Xml, simply because they weren’t using an API to produce the Xml.

- After files are processed move them to an archive directory; don’t delete.
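Several of these points fit into a short Python sketch: one transaction per file, a library doing the serialisation and escaping, and archiving instead of deleting. The file naming scheme, the directory layout and the write-to-temp-then-rename step are assumptions added for the example rather than recommendations made above.

```python
import json
import shutil
from pathlib import Path


def write_transaction(outbox: Path, order: dict) -> Path:
    """Write one order to its own file; the json module handles all escaping."""
    outbox.mkdir(parents=True, exist_ok=True)
    final = outbox / f"order-{order['id']}.json"
    temp = final.with_suffix(".tmp")
    temp.write_text(json.dumps(order, indent=2), encoding="utf-8")
    temp.replace(final)          # rename last so the reader never sees a partial file
    return final


def process_inbox(inbox: Path, archive: Path, handle) -> list:
    """Process each file, then move it to the archive instead of deleting it."""
    archive.mkdir(parents=True, exist_ok=True)
    processed = []
    for path in sorted(inbox.glob("order-*.json")):
        handle(json.loads(path.read_text(encoding="utf-8")))
        shutil.move(str(path), str(archive / path.name))
        processed.append(path.name)
    return processed
```

If `handle` raises, the file stays in the inbox, which makes re-processing a single failed transaction a matter of fixing the cause and running again.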

Database & staging tables

Databases and staging tables are our preferred means for both integration and synchronisation, providing a much more robust transport than file exchange. Staging tables are an intermediate set of database tables where the sender records pending data for the recipient(s) to later process.

Pros

- SQL: Provides vendor and format independence for querying and updating a database, and table schema changes are usually non-breaking.

- Audit and Traceability: SQL/Databases provide an easy means to record and query any key data required for auditing purposes e.g. timestamps, error & warning messages.

- Durability and Recoverability: Databases are naturally durable and far less vulnerable to corruption. In the event of a failure recovery can be made quickly assuming a backup is available.

Cons

- Security: Where interchange takes place over the internet the database needs to be open to the outside world, leading to potential attacks. These can be mitigated by restricting the connecting IP addresses and using complex passwords, amongst other measures.

Best practices

- Always have the following fields in a staging table:

  - Status: Used to record whether a record is pending or processed. Always include an error status which allows you to identify erroneous transactions quickly. It is recommended that an index is set up on the status field to help speed querying.

  - Last Modified Date: A timestamp to record when the last update was made.

  - Last Update By: Used to record which application made the last update.

  - Reason/error/message: Where there’s an error or a warning generated as part of the integration or synchronisation it should be recorded against the original record. This then gives you a robust audit trail which can be used later to trace problems.

  - Foreign Record Id: Where a recipient application generates a transaction or record id (such as an Order, Invoice, or even Customer number) update the staging table with this reference.

- Backup frequently: Depending on the volume of data or the location of the database i.e. for high volume sites or where the database is publicly accessible this may be several times a day.

- Always update the staging table immediately after writing to the destination application.

- Where processing takes a long time, consider setting the status field to an intermediate value to prohibit other integration processes from processing the same data.
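A staging table following these recommendations might look like the sketch below, shown in Python with SQLite purely for illustration. The table name, column names and status values (`PENDING`, `PROCESSING`, `PROCESSED`, `ERROR`) are assumptions, not a prescribed schema.

```python
import sqlite3
from datetime import datetime, timezone

SCHEMA = """
CREATE TABLE staging_orders (
    id                INTEGER PRIMARY KEY,
    payload           TEXT NOT NULL,
    status            TEXT NOT NULL DEFAULT 'PENDING',
    last_modified     TEXT,
    last_updated_by   TEXT,
    message           TEXT,
    foreign_record_id TEXT
);
CREATE INDEX ix_staging_orders_status ON staging_orders (status);
"""


def _now():
    return datetime.now(timezone.utc).isoformat()


def claim_pending(conn, app):
    """Mark pending rows as PROCESSING so another process does not pick them up."""
    rows = conn.execute(
        "SELECT id, payload FROM staging_orders WHERE status = 'PENDING' ORDER BY id"
    ).fetchall()
    conn.executemany(
        "UPDATE staging_orders SET status = 'PROCESSING', last_modified = ?, "
        "last_updated_by = ? WHERE id = ? AND status = 'PENDING'",
        [(_now(), app, row_id) for row_id, _ in rows],
    )
    conn.commit()
    return rows


def record_outcome(conn, app, row_id, foreign_id=None, error=None):
    """Update the staging row immediately after writing to the destination."""
    status = "ERROR" if error else "PROCESSED"
    conn.execute(
        "UPDATE staging_orders SET status = ?, message = ?, foreign_record_id = ?, "
        "last_modified = ?, last_updated_by = ? WHERE id = ?",
        (status, error, foreign_id, _now(), app, row_id),
    )
    conn.commit()
```

Note that the select-then-update in `claim_pending` is not atomic across separate processes; on a server database you would normally claim rows in a single statement or inside an explicit transaction with appropriate locking.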

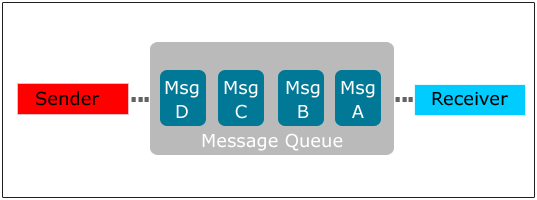

Message queue

A message queue is a transport level asynchronous protocol where a sender places a message into a queue until the receiver removes the message to process it. One of the key attributes of a message queue, especially for data synchronisation, is that messages are guaranteed to be delivered in FIFO order. Message queues have traditionally been limited to larger scale integration and synchronisation and where both sender and receiver exist on the same network. However, with the explosion of cloud computing and an agreed standard (AMQP), there is an increasing prevalence of low cost services:

- Windows Azure Service Bus

- Amazon SQS

- Storm MQ: Currently in beta, this ‘free’ service looks promising.

- FIFO: Messages are guaranteed to be delivered in order.

- Robust and Transactional: Message queues can be backed up, and are naturally transactional.

- Message Queues are a Transport: The data format of the message still needs to be agreed between sender and recipient.

- Use a flexible data format such as XML.

- File and database recovery is well known; message queue recovery less so. Understand how to administer your message queue technology and how to recover in the event of a failure.

- Prevent message build up by having an application which is constantly listening for incoming messages.

- Use different queues for each data flow; don’t try to multiplex multiple message types onto a single queue.
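
As a rough illustration of the points above, the sketch below uses Python's in-process `queue.Queue` as a stand-in for a real broker (it is not an AMQP client). It keeps one queue per data flow and uses XML as the agreed message format. The flow names, element names and the `send`/`drain` helpers are hypothetical.

```python
import queue
import xml.etree.ElementTree as ET

# One queue per data flow, rather than multiplexing message types onto one queue.
queues = {"customers": queue.Queue(), "orders": queue.Queue()}


def send(flow, tag, **fields):
    """Serialise the fields to a small XML document and enqueue it."""
    root = ET.Element(tag)
    for name, value in fields.items():
        ET.SubElement(root, name).text = str(value)
    queues[flow].put(ET.tostring(root, encoding="unicode"))


def drain(flow):
    """Receive every waiting message on one flow, oldest first."""
    received = []
    while True:
        try:
            message = queues[flow].get_nowait()
        except queue.Empty:
            return received
        doc = ET.fromstring(message)
        received.append({child.tag: child.text for child in doc})


send("orders", "Order", number="SO-1", customer="C001")
send("orders", "Order", number="SO-2", customer="C002")
send("customers", "Customer", code="C001")

orders = drain("orders")  # messages come back in the order they were sent
```

In a real deployment the receiver would listen continuously rather than drain on demand, which is what prevents messages building up.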

No interchange

In this scenario there is no intermediate data form; instead each application holds its pending data until the recipient application retrieves it. Unless an application specifically integrates with another, this style of interchange requires some form of middleware to mediate the interaction since:

- Each application has its own interface i.e. webservice or a COM/Java/.Net API.

- The data usually requires some transformation for it to ‘fit’ with the other.

Summary

If a choice can be made, use databases/staging tables as they are by far the easiest and most robust strategy. We expect an increased use of message queues, especially with hosted infrastructure environments such as Azure and Amazon. CSV: Dear oh dear! It may be easy to produce and simple to read, interpret and load into Excel. But it’s 2013! And there are more sophisticated and better means for exchanging data, so why are we still using this archaic format?

Data Structures

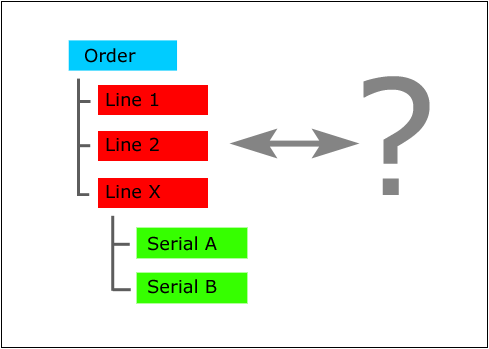

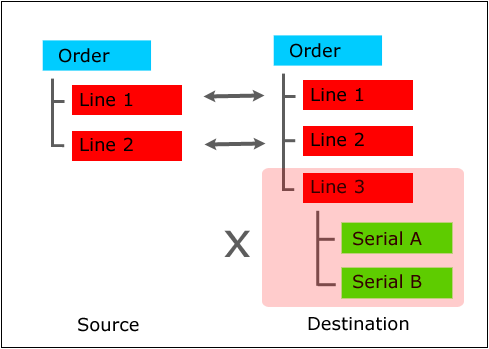

It is necessary to first understand the issues surrounding more complex data structures. Simple single-level data structures, such as customer records, can be readily synchronised. However, as data structures become more complex, so does the strategy. The above example is illustrative: both order and order lines must be synchronised; an order without lines is not an order. However, the third level of data, the serial numbers, adds several complications:

- Is it necessary to synchronise them? If they are used for transactional purposes, yes, but a view-only application may not require them.

- What do the serial numbers mean when an order line is updated? Are they a fresh set of serials, or just additions? While this question applies also to lines, the deeper the structure the more pronounced the issue becomes. This is due to the feedback of how the update is made on each level of data.

Means of Identifying Updates

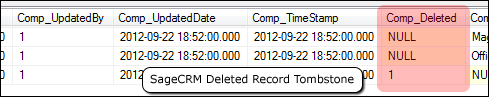

Inserting & Updating Records

Inserts and updates follow a simple pattern where the destination application is queried to identify if it contains an existing record. An update is performed where a match is found; otherwise when no matches are found, an insert is performed.

Deleted Records

Handling deletions is a much harder issue, primarily as the delete needs to be identified. There are three approaches:

Tombstoning

Tombstoning is a technique which instead of actually deleting the record, marks it as deleted, via a field within the record’s table. What makes this a preferred method for handling deletes is that the tombstone makes the delete operation explicit and in turn reduces complexity around synchronisation. Tombstoning however requires the source application to provide such functionality (usually only a feature when data synchronisation/integration is integral to the application e.g. CRM).

For applications that don’t support tombstoning, it is necessary to record the record deletion(s) in a separate interface table or file, usually by way of a trigger (to be discussed in part 3 of this blog).
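
The insert/update pattern and the tombstone check can be sketched together. The example below is a simplified illustration in which the destination is a plain dictionary keyed on a record id; the `is_deleted` flag and the `apply_change` helper are hypothetical names, and a real destination would be queried through its API or database instead.

```python
def apply_change(destination, record):
    """Apply one source record to the destination and return the action taken."""
    key = record["id"]
    if record.get("is_deleted"):
        # Tombstone: the source states the delete explicitly.
        return "delete" if destination.pop(key, None) is not None else "skip"
    # No tombstone: update where a match is found, otherwise insert.
    action = "update" if key in destination else "insert"
    destination[key] = {k: v for k, v in record.items() if k != "is_deleted"}
    return action


destination = {
    "C001": {"id": "C001", "name": "Acme"},
    "C003": {"id": "C003", "name": "Cog Co"},
}
source_changes = [
    {"id": "C001", "name": "Acme Ltd", "is_deleted": False},  # existing record
    {"id": "C002", "name": "Bolt", "is_deleted": False},      # new record
    {"id": "C003", "name": "Cog Co", "is_deleted": True},     # tombstoned record
]

actions = [apply_change(destination, r) for r in source_changes]
```

Because the delete arrives as an ordinary record, the synchroniser never has to infer that something has gone missing.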

Record Comparison

Record comparison involves comparing a set of data from the source application to the set of data in the destination application. A delete is performed when a record is found in the destination but not in the source.
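
At its simplest, record comparison is a set difference on record keys. The sketch below assumes order lines identified by a hypothetical (order number, line number) pair; `find_deleted` is an illustrative helper, not an existing API.

```python
def find_deleted(source_keys, destination_keys):
    """Keys present in the destination but no longer present in the source."""
    return sorted(set(destination_keys) - set(source_keys))


source_lines = [("SO-1", 1), ("SO-1", 3)]
destination_lines = [("SO-1", 1), ("SO-1", 2), ("SO-1", 3)]

# Line 2 no longer exists in the source, so it is a candidate for deletion.
to_delete = find_deleted(source_lines, destination_lines)
```

Note that both complete key sets must be read before any delete can be detected, which is part of why this approach suits small sets of child records better than a primary entity.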

In contrast to ‘Tombstoning’ this suffers from three primary problems:

- Complex: Record comparison is implicit; it requires the ‘data synchroniser’ to determine if a record has been deleted.

- Auditing: There is a lack of an audit trail where records just disappear, with no facility to check why/when a deletion occurred.

- OK for child records, not for the primary entity: Using comparison for child records in a hierarchical structure, e.g. order lines within an order, can be appropriate where there are limited records and the detection of deleted records is cheap and relatively straightforward.

- Data is loaded at a database level e.g. reporting & data warehouses and CRMs. Applications with a sophisticated data structure and/or API usually frown on this approach, as business logic and data validation are side-stepped, which can lead to integrity issues.

- The destination application is view only and non-transactional.

- There are no (or very limited) foreign-key constraints: Foreign keys enforce relationships between records. When a record is deleted so must all the associated records. For example, deleting a customer record also requires deleting all the customer’s associated order & invoice records.

- Data size: Deleting and re-inserting all records in a large dataset may take a long time to complete and can be expensive in terms of computing power, particularly with hosted environments where there can be CPU cycle costs. Pruning the dataset, usually by date, is an effective strategy to limit the load size.

Handling Application Logic

When synchronising data between applications it is necessary to negotiate and handle the restrictions or logic the API applies to the data.

- Business logic is much stricter than any database enforcement.

- Transactional data moves through a process, where the status of the transaction (or line) dictates whether modifications can be made.

- Deletes are never easy – Particularly with ERP applications, deletes are handled in a number of ways: master items may need to be marked as inactive (a form of tombstoning); transactions can either be deleted or cancelled.

- Atomicity: An update should be all or nothing; never have a situation where some updates to a single transaction or master item fail and some succeed. Whilst this should apply whether synchronising at data level or application level, it’s easier to fall into a situation where a partial update is made due to lack of understanding of the API.

- Is your synchronisation dumb or smart: You will need to make a choice as to how much logic you embed into your synchronisation; often it starts dumb and evolves to smart. What this means is the amount of logic required to cover certain scenarios or use cases. At the dumb end, there is no logic and a simple try/catch block is placed around your processing to catch any exceptions. Conversely, at the smart end you may check for an entity’s existence, raise workflow events, or handle specific edge cases.
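
The atomicity point can be illustrated at the data level with a database transaction. The sketch below uses Python's built-in `sqlite3` module with a hypothetical two-table order schema; when synchronising through an application API, the equivalent would be whatever transaction or batch mechanism that API provides. It also shows the ‘dumb’ end of the scale: a single try/except around the whole write.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical destination schema, for illustration only.
conn.executescript("""
CREATE TABLE orders (number TEXT PRIMARY KEY, customer TEXT NOT NULL);
CREATE TABLE order_lines (
    order_number TEXT NOT NULL REFERENCES orders(number),
    line_no      INTEGER NOT NULL,
    item         TEXT NOT NULL,
    qty          INTEGER NOT NULL CHECK (qty > 0),
    PRIMARY KEY (order_number, line_no)
);
""")


def write_order(conn, order):
    """Write header and lines as one unit; return None or the error text."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute(
                "INSERT INTO orders VALUES (?, ?)",
                (order["number"], order["customer"]),
            )
            conn.executemany(
                "INSERT INTO order_lines VALUES (?, ?, ?, ?)",
                [
                    (order["number"], n, line["item"], line["qty"])
                    for n, line in enumerate(order["lines"], start=1)
                ],
            )
    except sqlite3.Error as exc:
        return str(exc)
    return None


good = {"number": "SO-1", "customer": "C001",
        "lines": [{"item": "A", "qty": 2}, {"item": "B", "qty": 1}]}
# The second line breaks the qty check, so the header and first line
# written before it are rolled back as well.
bad = {"number": "SO-2", "customer": "C002",
       "lines": [{"item": "A", "qty": 1}, {"item": "B", "qty": 0}]}

ok = write_order(conn, good)
err = write_order(conn, bad)
```

The returned error text is the kind of message worth recording against the source record for later tracing.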

Summary

This post hopefully has helped to explain the most common synchronisation strategies and pitfalls. It is by no means complete and we would like to hear your opinions. The next post will discuss the various means i.e. file transfer, database, etc. available for data synchronisation.

Master Data

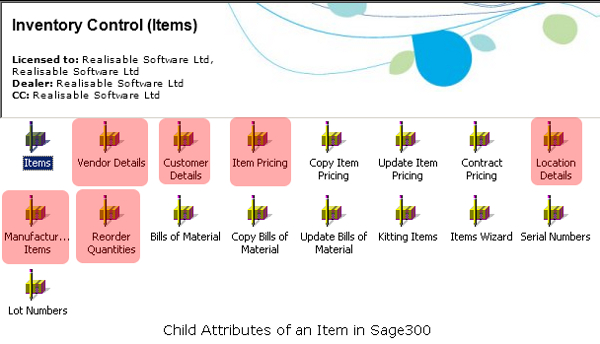

Master Data is conceptualised as key entities used within an organisation. Customers, Employees, Items & Suppliers are all considered master data. At the simple end of synchronising, Master Data records involve a single record of data per entity. However, Master Data often has a number of child attributes such as addresses for customers, bank details for employees, and a range of attributes such as locations, suppliers and units of measure for items. It is increasingly expected that Master Data be updatable from multiple applications where each application holds its own copy of a common ‘truth’.

Transactional Data

Whilst sometimes more complex due to their header/detail structure, transactions are usually easier to synchronise due to the following characteristics:

- Simple direction/flow – One application is the master and the other(s) are slaves, thus eliminating issues such as conflicts.

- Transactions are less likely to be updated – Once a transaction is posted it has reached its final state and requires only a single push to the target application. There are however a couple of notable exceptions: Sales & Purchase Orders often need to be synchronised to reflect their current ‘state’ and because of their more complex structure and heavy business logic require careful attention. Further, Customer & Supplier Payments can have a complex many-to-many interaction with their corresponding invoice documents.

Issues Surrounding Synchronisation

Data Structure Complexity – More complex data involving header, details, sub-details, etc. need strategies which address changes for each ‘level’ of data.

Synchronisation & Conflict Resolution

- Synchronisation needs to be considered at each level of data.

- Deciding which application, if any, is the master or whether bi-directional integration will be supported.

- Conflict Resolution – What rules should be followed where there is a conflict and is there sufficient data available to resolve a conflict and/or data storage to mediate conflicts?

- Field Level Transformation – Destructive transformation, where the result of a derived or calculated field cannot be accurately reversed, can prevent bi-directional synchronisation.

- Field Lengths and Type Differences – Two common issues arise: truncation of textual fields such as name, addresses & comments, and the mapping of enumerated fields i.e. drop down fields, where the choice is identified as an integer value within the source or target application.

- Data Structure Mapping – Cases where the structure of the source data does not match the target and where transformation is required to massage the data into the required shape.
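
The field length and enumerated field issues above can be sketched as a small transformation step. The maximum lengths, the status codes and the `transform` helper below are all hypothetical; real values would come from the source and target applications' schemas.

```python
# Hypothetical target field lengths and enumerated status mapping.
TARGET_MAX_LENGTHS = {"name": 10, "comment": 20}
STATUS_MAP = {0: "Active", 1: "On Hold", 2: "Inactive"}


def transform(record):
    """Map a source record to the target shape, reporting any truncation."""
    out, warnings = {}, []
    for field, max_len in TARGET_MAX_LENGTHS.items():
        value = record.get(field, "")
        if len(value) > max_len:
            # Truncation is destructive: the original text cannot be recovered
            # from the target, so it is reported rather than done silently.
            warnings.append(
                f"{field} truncated from {len(value)} to {max_len} characters"
            )
        out[field] = value[:max_len]
    code = record["status"]
    if code not in STATUS_MAP:
        raise ValueError(f"unmapped status code: {code!r}")
    out["status"] = STATUS_MAP[code]
    return out, warnings


mapped, warnings = transform(
    {"name": "Acme Holdings Ltd", "comment": "ok", "status": 1}
)
```

Raising on an unmapped code, rather than guessing a default, keeps an unexpected drop-down value from being written to the target unnoticed.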